Elements of Machine Learning (COMPSCI 309)

Spring 2023-2024 / Session 4 (7 weeks, 35 hours)

Course Period: March 18 - May 3, 2024

- Lectures: Tuesday / Thursday @ 14:45-17:15 (Classroom: IB 1056 + Zoom)

Machine Learning (ML) is a popular, interdisciplinary field that draws on Computer Science, Mathematics and Statistics. ML aims to learn from data and experience rather than being explicitly programmed. Its target applications are complex tasks that are challenging, impractical or unrealistic to program by hand. ML can address sophisticated activities that humans or animals do routinely, such as speech recognition, image understanding and driving. It also targets tasks that demand capabilities beyond human capacity in terms of speed and memory.

This course covers theoretical and practical issues in modern machine learning techniques. Topics include statistical foundations, supervised and unsupervised learning, decision trees, hidden Markov models, neural networks, and reinforcement learning. The course requires basic programming skills (Python) and introductory-level knowledge of calculus, probability and statistics; familiarity with certain linear algebra concepts is also beneficial.

By the end of this course, you will be able to:

- specify a given learning task as an ML problem

- determine the appropriate ML algorithms for addressing an ML problem

- manipulate the given data concerned with a learning task so that the preferred ML algorithm can be effectively applied

- construct generalizable ML models that can address a given ML problem on unseen data

- analyze the performance of ML algorithms and reveal their shortcomings with respect to the nature of the data

- build complete ML workflows in Python with the relevant libraries / frameworks / tools, and communicate your methods and results effectively using Jupyter notebooks

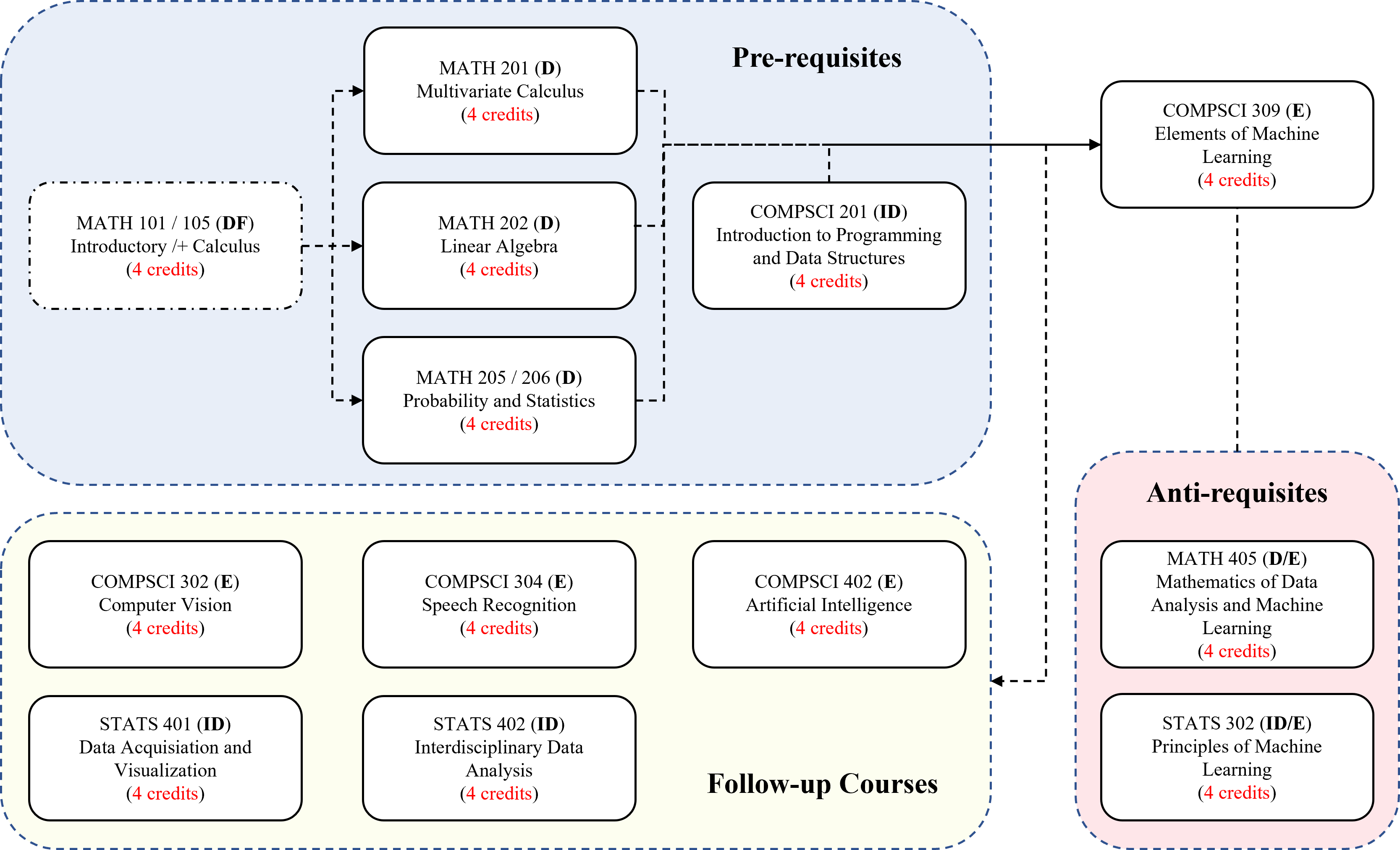

The chart shows how COMPSCI 309 fits into the DKU curriculum. The abbreviations indicate the course types, i.e. D: Divisional, DF: Divisional Foundation, ID: Interdisciplinary and E: Elective. Refer to the DKU Undergraduate Bulletin for more details.

Pre-requisites

- MATH 201: Multivariable Calculus

- MATH 202: Linear Algebra

- MATH 205 / 206: Probability and Statistics

- COMPSCI 201: Introduction to Programming and Data Structures

Anti-requisites

- MATH 405: Mathematics of Data Analysis and Machine Learning

- STATS 302: Principles of Machine Learning

There is no official textbook for this course. Still, the following books can be used as references.

Reference Books

- Introduction to Statistical Learning, Gareth James, Daniela Witten, Trevor Hastie, Rob Tibshirani (1st / 2nd Edition), 2017 (Corrected 7th Printing) / 2021, Springer (Free Book) [ Slides and Videos ]

- Pattern Recognition and Machine Learning, Christopher Bishop (1st Edition), 2006, Springer (Free Book) [ Solution Manual (2009) ]

- Probabilistic Machine Learning: An Introduction, Kevin P. Murphy (1st Edition), 2021, MIT Press (Free Book)

- Machine Learning: A Probabilistic Perspective, Kevin P. Murphy (1st Edition), 2012, MIT Press (Free Book)

- Algorithms for Decision Making, Mykel J. Kochenderfer, Tim A. Wheeler, Kyle H. Wray (1st Edition), 2022, MIT Press (Free Book)

- Gaussian Processes for Machine Learning, Carl Edward Rasmussen, Christopher K. I. Williams (1st Edition), 2006, MIT Press (Free Book)

- Probabilistic Graphical Models, Daphne Koller, Nir Friedman (1st Edition), 2009, MIT Press

- Machine Learning, Zhi-Hua Zhou (1st Edition), 2021, Springer

- Introduction to Machine Learning, Ethem Alpaydin (3rd Edition), 2014, MIT Press

- Understanding Machine Learning: From Theory to Algorithms, Shai Shalev-Shwartz, Shai Ben-David (1st Edition), 2014, Cambridge University Press (Free Book)

- Learning from Data, Yaser S. Abu-Mostafa, Malik Magdon-Ismail, Hsuan-Tien Lin (1st Edition), 2012, AMLBook

- Machine Learning: an Algorithmic Perspective, Stephen Marsland (2nd Edition), 2015, CRC Press

- Machine Learning Refined: Foundations, Algorithms, and Applications, Jeremy Watt, Reza Borhani, Aggelos K. Katsaggelos (1st Edition), 2016, Cambridge University Press

- Foundations of Machine Learning, Mehryar Mohri, Afshin Rostamizadeh, Ameet Talwalkar (2nd Edition), 2018, MIT Press (Free Book)

- Machine Learning, Tom Mitchell (1st Edition), 1997, McGraw Hill Press

- A Course in Machine Learning, Hal Daume III (2nd Edition), 2017 (Free Book)

- A First Course in Machine Learning, Simon Rogers, Mark Girolami (2nd Edition), 2017, Chapman and Hall/CRC Press [ Source Code ]

- Machine Learning: A First Course for Engineers and Scientists, Andreas Lindholm, Niklas Wahlstrom, Fredrik Lindsten, Thomas B. Schon (1st Edition), 2022, Cambridge University Press (Free Book) [ Lecture Slides ]

- Introduction to Machine Learning, Alex Smola, S.V.N. Vishwanathan (1st Edition), 2008, Cambridge University Press (Free Book)

- Patterns, Predictions, and Actions: A Story about Machine Learning, Moritz Hardt, Benjamin Recht (1st Edition), 2022, Princeton University Press (Free Preprint)

- Machine Learning Fundamentals: A Concise Introduction, Hui Jiang (1st Edition), 2022, Cambridge University Press

- Machine Learning: A Concise Introduction, Steven W. Knox (1st Edition), 2018, Wiley

- Machine Learning for Engineers, Osvaldo Simeone (1st Edition), 2022, Cambridge University Press

- Bayesian Reasoning and Machine Learning, David Barber (1st Edition), 2012/2020, Cambridge University Press (Free Book)

- An Elementary Introduction to Statistical Learning Theory, Sanjeev Kulkarni, Gilbert Harman (1st Edition), 2011, Wiley

- All of Statistics: A Concise Course in Statistical Inference, Larry Wasserman (1st Edition), 2004, Springer [ Datasets ]

- Applied Predictive Modeling, Max Kuhn, Kjell Johnson (2nd Edition), 2018, Springer

- The Hundred-Page Machine Learning Book, Andriy Burkov, 2019 (Free Book - Draft Version)

- Machine Learning Mastery With Python, Jason Brownlee, 2016

- Reinforcement Learning: an Introduction, Richard S. Sutton and Andrew G. Barto (2nd Edition), 2020, MIT Press (Free Book)

- Algorithms for Reinforcement Learning, Csaba Szepesvari (1st Edition), 2010, Morgan and Claypool (Free Book)

- Foundations of Deep Reinforcement Learning: Theory and Practice in Python, Laura Graesser, Wah Loon Keng (1st Edition), 2019, Addison-Wesley [ Source Code ]

- Artificial Intelligence: With an Introduction to Machine Learning, Richard E. Neapolitan, Xia Jiang (2nd Edition), 2018, CRC Press

- Applying Reinforcement Learning on Real-World Data with Practical Examples in Python, Philip Osborne, Kajal Singh, Matthew E. Taylor, 2022, Springer (Free Book)

- Machine Learning from Weak Supervision, Masashi Sugiyama, Han Bao, Takashi Ishida, Nan Lu, Tomoya Sakai and Gang Niu (1st Edition), 2022, MIT Press

- The StatQuest Illustrated Guide To Machine Learning, Josh Starmer (1st Edition), 2022 [ Video Lectures ]

- Machine Learning Q and AI: 30 Essential Questions and Answers on Machine Learning and AI, Sebastian Raschka (1st Edition), 2024, No Starch Press [ Code Repository ]

- The Art of Feature Engineering: Essentials for Machine Learning, Pablo Duboue (1st Edition), 2020, Cambridge University Press

- Feature Engineering and Selection: A Practical Approach for Predictive Models, Max Kuhn, Kjell Johnson (1st Edition), 2021, Chapman & Hall/CRC Press

- Feature Engineering for Machine Learning: Principles and Techniques for Data Scientists, Alice Zheng, Amanda Casari (1st Edition), 2018, O'Reilly Press

- Machine Learning Yearning, Andrew Ng (1st Edition), 2020, deeplearning.ai (Free Book - Draft Version)

- Machine Learning: The New AI, Ethem Alpaydin (1st Edition), 2016, MIT Press

- Machine Learning Engineering, Andriy Burkov (1st Edition), 2020, True Positive Inc. (Free Book)

- Deep Learning with Python, Francois Chollet (2nd Edition), 2021, Manning Publications (Free Book) [ Source Code ]

- Deep Learning, Ian Goodfellow, Yoshua Bengio, Aaron Courville (1st Edition), 2016, MIT Press (Free Book)

- Deep Learning: Foundations and Concepts, Christopher M. Bishop, Hugh Bishop (1st Edition), 2024, Springer (Free Book)

- Neural Networks and Deep Learning, Michael Nielsen, 2019 (Free Book)

- Neural Networks and Deep Learning: A Textbook, Charu C. Aggarwal (1st Edition), 2018, Springer

- Machine Learning with Neural Networks: an Introduction for Scientists and Engineers, Bernhard Mehlig (1st Edition), 2022, Cambridge University Press

- Learning Deep Learning: Theory and Practice of Neural Networks, Computer Vision, NLP, and Transformers using TensorFlow, Magnus Ekman (1st Edition), 2021, Addison-Wesley [ Source Code - Cheat Sheets: 0, 1, 2, 3 ]

- Deep Learning for Vision Systems, Mohamed Elgendy (1st Edition), 2020, Manning Publications [ Source Code ]

- Inside Deep Learning: Math, Algorithms, Models, Edward Raff (1st Edition), 2022, Manning Publications [ Source Code ]

- Dive into Deep Learning, Aston Zhang, Zachary C. Lipton, Mu Li, and Alexander J. Smola, 2020 (Free Book)

- Understanding Deep Learning, Simon J.D. Prince (1st Edition), 2024 (Free Book) [ Code Repository ]

- The Science of Deep Learning, Iddo Drori (1st Edition), 2022, Cambridge University Press

- Neural Network Design, Martin T Hagan, Howard B Demuth, Mark H Beale, Orlando De Jesus (2nd Edition), 2014 (Free Book)

- Deep Learning for Natural Language Processing, Mihai Surdeanu, Marco Antonio Valenzuela-Escarcega (1st Edition), 2024, Cambridge University Press

- Mathematical Aspects of Deep Learning, Philipp Grohs, Gitta Kutyniok (1st Edition / Eds.), 2023, Cambridge University Press

- Artificial Intelligence and Causal Inference, Momiao Xiong (1st Edition), 2022, Chapman and Hall/CRC Press

- Concise Machine Learning, Jonathan Richard Shewchuk (Ed: May 3, 2022), 2022, UC Berkeley - ML Lecture Notes (Free Book)

- Advances in Deep Learning, M. Arif Wani, Farooq Ahmad Bhat, Saduf Afzal and Asif Iqbal Khan, 2020, Springer

- Grokking Deep Learning, Andrew W. Trask (1st Edition), 2019, Manning Publications

- Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems, Aurelien Geron (2nd Edition), 2019, O'Reilly

- The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Trevor Hastie, Robert Tibshirani, Jerome Friedman (2nd Edition), 2009, Springer (Free Book)

- Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition, Dan Jurafsky, James H. Martin (3rd Edition), 2020, Prentice Hall (Free Book)

- Personalized Machine Learning, Julian McAuley (1st Edition), 2022, Cambridge University Press

- A Hands-On Introduction to Machine Learning, Chirag Shah (1st Edition), 2023, Cambridge University Press

- Designing Machine Learning Systems: An Iterative Process for Production-Ready Applications, Chip Huyen (1st Edition), 2022, O'Reilly Press

- Distributed Machine Learning Patterns, Yuan Tang (1st Edition), 2024, Manning Publications [ Code Repository ]

- Machine Learning Theory and Applications: Hands-on Use Cases with Python on Classical and Quantum Machines, Xavier Vasques (1st Edition), 2024, Wiley

- The Statistical Physics of Data Assimilation and Machine Learning, Henry D. I. Abarbanel (1st Edition), 2022, Cambridge University Press

- Ensemble Methods: Foundations and Algorithms, Zhi-Hua Zhou (1st Edition), 2012, CRC Press

- The AI Playbook: Mastering the Rare Art of Machine Learning Deployment, Eric Siegel (1st Edition), 2024, MIT Press

- Data-Driven Fluid Mechanics: Combining First Principles and Machine Learning, Miguel A. Mendez, Andrea Ianiro, Bernd R. Noack, Steven L. Brunton (1st Edition), 2023, Cambridge University Press

- Probabilistic Machine Learning for Civil Engineers, James-A. Goulet (1st Edition), 2020, MIT Press

- Mathematics for Machine Learning, Marc Peter Deisenroth, A. Aldo Faisal, Cheng Soon Ong (1st Edition), 2020, Cambridge University Press (Free Book)

- Linear Algebra and Optimization for Machine Learning: A Textbook, Charu C. Aggarwal (1st Edition), 2020, Springer

- Optimization for Machine Learning, Suvrit Sra, Sebastian Nowozin, Stephen J. Wright (Edited - 1st Edition), 2011, MIT Press

- Convex Optimization, Stephen Boyd, Lieven Vandenberghe (1st Edition), 2004, Cambridge University Press (Free Book)

- An Introduction to Optimization: With Applications in Machine Learning and Data Analytics, Jeffrey Paul Wheeler (1st Edition), 2023, CRC Press

- Data Mining: Concepts and Techniques, Jiawei Han, Micheline Kamber, Jian Pei (3rd Edition), 2012, Morgan Kaufmann

- Data Mining and Analysis: Fundamental Concepts and Algorithms, Mohammed J. Zaki and Wagner Meira, Jr. (1st Edition), 2014, Cambridge University Press (Free Book)

- Data Mining: Practical Machine Learning Tools and Techniques, Ian H. Witten, Eibe Frank, Mark A. Hall, Christopher J. Pal (4th Edition), 2016, Morgan Kaufmann Press

- Data Mining: The Textbook, Charu C. Aggarwal (1st Edition), 2015, Springer Press

- Big Data and Social Science: Data Science Methods and Tools for Research and Practice, Ian Foster, Rayid Ghani, Ron S. Jarmin, Frauke Kreuter, Julia Lane (2nd Edition - Ed.), 2020, CRC Press (Free Book)

- Data Clustering, Chandan K. Reddy, Charu C. Aggarwal (1st Edition - Ed.), 2014, CRC Press (Free Book)

- Data Cleaning, Ihab F. Ilyas, Xu Chu (1st Edition), 2019, ACM

- Image Processing and Machine Learning, Volume 1: Foundations of Image Processing, Erik Cuevas, Alma Nayeli Rodriguez (1st Edition), 2024, CRC Press

- Image Processing and Machine Learning, Volume 2: Advanced Topics in Image Analysis and Machine Learning, Erik Cuevas, Alma Nayeli Rodriguez (1st Edition), 2024, CRC Press

Lecture Notes / Slides

- Week 0 (Reviews)

- Linear Algebra

- External Review: CS229: Machine Learning (Zico Kolter et al., Stanford U) - Linear Algebra

- External Review (+ Lecture Videos): Linear Algebra Review (Zico Kolter, CMU) - Linear Algebra

- Book Chapter: Deep Learning by Ian Goodfellow, Yoshua Bengio, Aaron Courville (2016), Chapter 2 - Linear Algebra

- Probability & Statistics

- External Course: CS109: Probability for Computer Scientists (Alex Tsun and Tim Gianitsos, Stanford U)

- Free Book: Probability & Statistics with Applications to Computing by Alex Tsun

- External Review: CS229: Machine Learning (Arian Maleki and Tom Do, Stanford U) - Probability Theory

- Book Chapter: Deep Learning by Ian Goodfellow, Yoshua Bengio, Aaron Courville (2016), Chapter 3 - Probability

- Week 1 [18/03 - 21/03] (Keywords: History, Terminology and Basics; Supervised Learning; Regression Problem; Gradient Descent)

- About COMPSCI 309

- Introduction to Machine Learning

- Article: Jordan, M.I., 2019. Artificial intelligence-the revolution hasn't happened yet. Harvard Data Science Review, 1(1)

- Article: Jordan, M.I. and Mitchell, T.M., 2015. Machine learning: Trends, perspectives, and prospects. Science, 349(6245), pp.255-260

- Article: Breiman, L., 2001. Statistical modeling: The two cultures. Statistical Science, 16(3), pp.199-231

- Article: Minsky, M., 1961. Steps Toward Artificial Intelligence. Proceedings of the IRE, 49(1), pp.8-30

- Video: Shakey the Robot: The First Robot to Embody Artificial Intelligence (1966-1972) by SRI International

- Learning Problem

- External Video: CS156: Learning Systems (Yaser Abu-Mostafa, Caltech) - Learning Problem

- Linear Regression

- External Lecture Notes: CS229: Machine Learning (Andrew Ng, Stanford U) [Part I] - Linear Regression

- External Video: CS229: Machine Learning (Andrew Ng, Stanford U) - Linear Regression

- Over / Under-fitting

- Book Chapter: Introduction to Statistical Learning by Gareth James, Daniela Witten, Trevor Hastie, Rob Tibshirani (2017), Chapter 2.2.2 - Bias-Variance Trade-Off

- Book Chapter: Introduction to Statistical Learning by Gareth James, Daniela Witten, Trevor Hastie, Rob Tibshirani (2017), Chapter 6.2 - Shrinkage Methods

- Recitation / Lab: Google Colab, Python (+ NumPy, Matplotlib), scikit-learn, Linear Regression

- Week 2 [25/03 - 28/03] (Keywords: Supervised Learning; Classification Problem; Regression Problem)

- Logistic Regression

- External Lecture Notes: CS229: Machine Learning (Andrew Ng, Stanford U) [Part II] - Logistic Regression

- External Video: CS229: Machine Learning (Andrew Ng, Stanford U) - Logistic Regression

- k-Nearest Neighbors (kNN)

- External Lecture Notes: CS4780: Machine Learning for Intelligent Systems (Kilian Weinberger, Cornell U) - kNN

- External Video: CS4780: Machine Learning for Intelligent Systems (Kilian Weinberger, Cornell U) - kNN

- Naive Bayes

- Review: Deep Learning by Ian Goodfellow, Yoshua Bengio, Aaron Courville, Chapter 3 - Probability

- Article: Hand, D.J. and Yu, K., 2001. Idiot's Bayes-not so stupid after all? International Statistical Review, 69(3), pp.385-398

- External Lecture Notes: CS4780: Machine Learning for Intelligent Systems (Kilian Weinberger, Cornell U) - Bayes Classifier and Naive Bayes

- External Video: CS4780: Machine Learning for Intelligent Systems (Kilian Weinberger, Cornell U) - Naive Bayes

- Recitation / Lab: Pandas, Logistic Regression, kNN, Naive Bayes

- Homework 1: TBA

- Week 3 [01/04 - 05/04; 04/04 lecture is moved to 05/04] Artificial Neural Networks (Keywords: Supervised Learning; Feed-forward Neural Networks; Regression Problem; Classification Problem)

- Perceptrons

- Article (Optional / Historical): McCulloch, W.S. and Pitts, W., 1943. A logical calculus of the ideas immanent in nervous activity. The bulletin of mathematical biophysics, 5(4), pp.115-133

- Article (Optional / Historical): Rosenblatt, F., 1958. The perceptron: a probabilistic model for information storage and organization in the brain. Psychological review, 65 (6), p. 386

- Book Chapter: Neural Networks and Deep Learning by Michael Nielsen (2019), Chapter 1 - Perceptrons

- External Lecture Notes: CS4780: Machine Learning for Intelligent Systems (Kilian Weinberger, Cornell U) - Perceptrons

- External Video: CS4780: Machine Learning for Intelligent Systems (Kilian Weinberger, Cornell U) - Perceptrons

- Multi-layer Perceptrons (MLPs)

- External Lecture Notes: CS229: Machine Learning (Andrew Ng, Stanford U) - Backpropagation

- External Lecture Notes: CS229: Machine Learning (Andrew Ng, Stanford U) - Regularization and Model Selection

- External Demo: An Interactive Visualization of Neural Networks (Google): TensorFlow Playground

- Recitation / Lab: TensorFlow, Neural Networks

- Homework 2: TBA

- Week 4 [08/04 - 11/04] (Keywords: Supervised Learning; Regression Problem; Classification Problem)

- Decision Trees

- Book Chapter: Introduction to Statistical Learning by Gareth James, Daniela Witten, Trevor Hastie, Rob Tibshirani (2014), Chapter 8 - Tree-based Methods

- Ensembles: Bagging and Boosting

- Book Chapter: Pattern Recognition and Machine Learning by Christopher Bishop (2006), Chapter 14 - Combining Models

- Book Chapter: Introduction to Statistical Learning by Gareth James, Daniela Witten, Trevor Hastie, Rob Tibshirani (2017), Chapter 8.2 - Bagging, Random Forests, Boosting

- Support Vector Machines (SVM)

- External Lecture Notes: CS229: Machine Learning (Andrew Ng, Stanford U) [Part VI] - SVM

- External Video: CS229: Machine Learning (Andrew Ng, Stanford U) - SVM

- External Lecture Notes: CS4780: Machine Learning for Intelligent Systems (Kilian Weinberger, Cornell U) - SVM

- External Video: CS4780: Machine Learning for Intelligent Systems (Kilian Weinberger, Cornell U) - SVM

- Recitation / Lab: Decision Trees, Ensembles, SVM

- Homework 3: TBA

- MIDTERM (Date: TBA)

- Week 5 [15/04 - 18/04] (Keywords: Unsupervised Learning; Dimensionality Reduction; Clustering (+ Evaluation and Analysis))

- Dimensionality Reduction

- Principal Component Analysis (PCA)

- External Lecture Notes: CS229: Machine Learning (Andrew Ng, Stanford U) - PCA

- External Lecture Notes: CS4786: Machine Learning for Data Science (Karthik Sridharan, Cornell U) - PCA

- Clustering: k-means and k-medoids

- External Colab Practice: Google ML Crash Course with TensorFlow: Clustering Programming Exercise

- External Demo: Andrey A. Shabalin (U Utah): k-means Clustering

- Recitation / Lab: PCA, k-means

- Homework 4: TBA

- Week 6 [22/04 - 25/04] (Keywords: Sequential Data Models)

- Markov Chains (MCs)

- Book Chapter: Pattern Recognition and Machine Learning by Christopher Bishop (2006), Chapter 13.1 - Markov Models

- Book Chapter: Speech and Language Processing by Dan Jurafsky, James H. Martin (2023), Chapter 8.4.1 - Markov Chains

- Hidden Markov Models (HMMs)

- Book Chapter: Pattern Recognition and Machine Learning by Christopher Bishop (2006), Chapter 13.2 - HMMs

- Book Chapter: Speech and Language Processing by Dan Jurafsky, James H. Martin (2023), Chapter 8.4.2 - The Hidden Markov Model

- Markov Decision Processes (MDPs)

- Book Chapter: Reinforcement Learning: An Introduction by Richard Sutton and Andrew Barto (2020), Chapter 3 - Finite Markov Decision Processes

- Recitation / Lab: HMMs

- Homework 5: TBA

- Week 7 [29/04 - 02/05] (Keywords: Reinforcement Learning)

- Reinforcement Learning (RL)

- Book Chapter: Reinforcement Learning: An Introduction by Richard Sutton and Andrew Barto (2020), Chapter 1 - Introduction

- Book Chapter: Applying Reinforcement Learning on Real-World Data with Practical Examples in Python by P Osborne, K Singh, ME Taylor (2022), Chapter 1 - Basics and Definitions

- External Video: CS234: Reinforcement Learning (Emma Brunskill, Stanford U) - Introduction [ Lecture Slides ]

- Value and Policy Iterations

- Book Chapter: Applying Reinforcement Learning on Real-World Data with Practical Examples in Python by P Osborne, K Singh, ME Taylor (2022), Chapter 2.2.2 - Policy Improvement

- Model-based RL

- Book Chapter: Applying Reinforcement Learning on Real-World Data with Practical Examples in Python by P Osborne, K Singh, ME Taylor (2022), Chapter 2.2 - Model-based Methods

- Value Function Approximation

- External Video: CS234: Reinforcement Learning (Emma Brunskill, Stanford U) - Value Function Approximation [ Lecture Slides ]

- Recitation / Lab: RL

- Homework 6: TBA

- Project Presentations (Date: TBA)

- FINAL (Date: TBA)

HOLIDAY [04/04, Thursday]: Qing Ming - Tomb Sweeping Day (NO CLASSES) - Continue on [05/04, Friday]

HOLIDAY [01/05, Wednesday]: International Labor Day

Grading

- Homework: 20%

- Mathematical, Conceptual, or Programming related

- Submit on Sakai; 6 in total, the lowest score is dropped

- Weekly Journal: 10%

- Each week, write a page or so about what you have learned

- Submit on Sakai; 2 points off for each missing journal, capped at 10

- Midterm: 20%

- Final: 30%

- Project: 20%

- Report Rubric (TBA)

- Presentation Rubric (TBA)
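As a rough illustration of how the weights above combine, here is a minimal Python sketch. It is not an official grade calculator. It assumes every component is scored on a 0-100 scale, that the five retained homework scores are weighted equally, and that "capped at 10" means the total journal deduction never exceeds the 10 journal points. The function name `course_grade` and its parameters are hypothetical.

```python
def course_grade(homework, journals_missing, midterm, final, project):
    """Combine component scores (each assumed to be on a 0-100 scale)
    into an overall course percentage using the syllabus weights."""
    if len(homework) != 6:
        raise ValueError("expected 6 homework scores")

    # Homework (20%): drop the lowest of the 6 scores, average the other 5.
    # Equal weighting of the retained scores is an assumption.
    kept = sorted(homework)[1:]
    homework_avg = sum(kept) / len(kept)

    # Weekly journal (10%): 2 points off per missing journal,
    # with the total deduction capped at 10 points (assumed reading).
    journal_points = 10 - min(10, 2 * journals_missing)

    # Midterm 20%, Final 30%, Project 20%.
    return (0.20 * homework_avg
            + journal_points
            + 0.20 * midterm
            + 0.30 * final
            + 0.20 * project)


if __name__ == "__main__":
    total = course_grade(
        homework=[90, 80, 100, 70, 85, 95],
        journals_missing=1,
        midterm=80,
        final=85,
        project=90,
    )
    print(f"Overall: {total:.1f}%")
```

With these sample inputs, the 70 is dropped, the homework average is 90 (18 points), the journal contributes 8 points, and the overall result comes to about 85.5%.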

Reference Courses

- CS229: Machine Learning (Stanford U.) [ Lecture Videos ]

- Coursera: Machine Learning by Andrew Ng (Stanford U.)

- CS4780: Machine Learning for Intelligent Systems by Kilian Weinberger (Cornell U.) [ Lecture Videos ]

- CS156: Learning Systems by Yaser S. Abu-Mostafa (Caltech) [ Learning from Data: Lecture Videos ]

Sample Projects

- CS229: Machine Learning (Stanford U.) - Final Projects (Fall 2004 - Fall 2023)

- CS230: Deep Learning (Stanford U.) - Final Projects (Fall 2018 - Fall 2022)

- CS231n: Deep Learning for Computer Vision (Stanford U.) - Final Projects (Spring 2022) [ Resources ]

Other Books

Quick / Easy Reads:

- The Self-Assembling Brain: How Neural Networks Grow Smarter, Peter Robin Hiesinger (1st Edition), 2021, Princeton University Press

- Behind Deep Blue: Building the Computer That Defeated the World Chess Champion, Feng-hsiung Hsu (2nd Edition), 2002 / 2022, Princeton University Press [ Video: Deep Blue | Down the Rabbit Hole ]

- AI Superpowers: China, Silicon Valley, and the New World Order, Kai-Fu Lee (1st Edition), 2018, Houghton Mifflin Harcourt [ Video Lecture ]

- AI 2041: Ten Visions for Our Future, Kai-Fu Lee, Chen Qiufan (1st Edition), 2021, Currency

- Life 3.0: Being Human in the Age of Artificial Intelligence, Max Tegmark (1st Edition), 2018, Vintage [ Video Lecture ]

- Superintelligence: Paths, Dangers, Strategies, Nick Bostrom (1st Edition), 2014, Oxford University Press [ Video Lecture ]

- The Emotion Machine: Commonsense Thinking, Artificial Intelligence, and the Future of the Human Mind, Marvin Minsky (1st Edition), 2006, Simon & Schuster (Free Book)

- The Society of Mind, Marvin Minsky (1st Edition), 1988, Simon & Schuster [ Video Lectures: MIT 6.868J The Society of Mind (Fall 2011) ]

- Machines like Us: Toward AI with Common Sense, Ronald J. Brachman, Hector Levesque (1st Edition), 2022, MIT Press

- A Thousand Brains: A New Theory of Intelligence, Jeff Hawkins (2nd Edition), 2022, Basic Books [ Video Lecture ]

- The Myth of Artificial Intelligence: Why Computers Can't Think the Way We Do, Erik J. Larson (1st Edition), 2021, Belknap Press

- Genius Makers: The Mavericks Who Brought AI to Google, Facebook, and the World, Cade Metz (1st Edition), 2021, Dutton

- What Computers Still Can't Do: A Critique of Artificial Reason, Hubert L. Dreyfus (1st Edition), 1992, MIT Press

- A Brief History of Artificial Intelligence: What It Is, Where We Are, and Where We Are Going, Michael Wooldridge (1st Edition), 2021, Flatiron Books

- Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence, Kate Crawford (1st Edition), 2021, Yale University Press

- Linguistics for the Age of AI, Marjorie McShane, Sergei Nirenburg (1st Edition), 2021, MIT Press

- AI Assistants, Roberto Pieraccini (1st Edition), 2021, MIT Press

- How Humans Judge Machines, Cesar A. Hidalgo, Diana Orghian, Jordi Albo Canals, Filipa de Almeida, Natalia Martin (1st Edition), 2021, MIT Press

- Your Wit Is My Command: Building AIs with a Sense of Humor, Tony Veale (1st Edition), 2021, MIT Press

- Machine Hallucinations: Architecture and Artificial Intelligence, Neil Leach, Matias del Campo (1st Edition), 2022, Wiley

- The Future of the Professions: How Technology Will Transform the Work of Human Experts, Richard Susskind and Daniel Susskind (1st Edition), 2016, Oxford University Press

- How to Build Your Career in AI, Andrew Ng (1st Edition), 2022, DeepLearning.AI (Free Book)

Python Programming:

- Intro to Python for Computer Science and Data Science, Paul Deitel, Harvey Deitel (1st Edition), 2020, Pearson

- Introduction to Computation and Programming Using Python: With Application to Computational Modeling and Understanding Data, John V. Guttag (3rd Edition), 2021, MIT Press [ Source Code in Python ]

- Starting out with Python, Tony Gaddis (5th Edition), 2021, Pearson

- Python Programming and Numerical Methods: A Guide for Engineers and Scientists, Qingkai Kong, Timmy Siauw, Alexandre Bayen (1st Edition), 2020, Academic Press (Free Book)

- Think Python: How to Think Like a Computer Scientist, Allen B. Downey (2nd Edition), 2016, O'Reilly Press (Free Book)

- How to Think Like a Computer Scientist: Learning with Python 3, Peter Wentworth, Jeffrey Elkner, Allen B. Downey, Chris Meyers (3rd Edition), 2012 (Free Book)

- A Programmer's Guide to Computer Science (Vol. 1), William M. Springer II (1st Edition), 2019, Jaxson Media

- A Programmer's Guide to Computer Science (Vol. 2), William M. Springer II (1st Edition), 2020, Jaxson Media

- A Byte of Python, Swaroop C. H. (4th Edition), 2016 (Free Book)

- Project Python, Devin Balkcom, 2011 (Free Book)

- Python for Everybody: Exploring Data in Python 3, Charles Severance, 2016 (Free Book)

- Automate The Boring Stuff With Python, Al Sweigart (2nd Edition), 2019, No Starch Press (Free Book)

- Beyond the Basic Stuff with Python, Al Sweigart (1st Edition), 2020, No Starch Press (Free Book)

- Python Programming in Context, Bradley N. Miller, David L. Ranum, Julie Anderson (3rd Edition), 2019, Jones & Bartlett Learning

- Python Programming: An Introduction to Computer Science, John Zelle (3rd Edition), 2016, Franklin, Beedle & Associates

- Python Crash Course: A Hands-On, Project-Based Introduction to Programming, Eric Matthes (2nd Edition), 2016, No Starch Press (Free Book)

- Learn Python 3 the Hard Way, Zed A. Shaw (1st Edition), 2017, Addison-Wesley

- Introducing Python: Modern Computing in Simple Packages, Bill Lubanovic (2nd Edition), 2019, O'Reilly Press

- Clean Code in Python: Develop Maintainable and Efficient Code, Mariano Anaya (2nd Edition), 2021, Packt

- The Self-Taught Computer Scientist: The Beginner's Guide to Data Structures & Algorithms, Cory Althoff (1st Edition), 2021, Wiley

- The Big Book of Small Python Projects: 81 Easy Practice Programs, Al Sweigart (1st Edition), 2021, No Starch Press (Free Book)

- Invent Your Own Computer Games with Python, Al Sweigart (4th Edition), 2016, No Starch Press (Free Book)

- Cracking Codes with Python: An Introduction to Building and Breaking Ciphers, Al Sweigart (1st Edition), 2018, No Starch Press (Free Book)

Python Programming for Data Science / Analytics:

- Python Data Science Handbook: Essential Tools for Working with Data, Jake VanderPlas (1st Edition), 2017, O'Reilly Press

- Python for Data Analysis: Data Wrangling with Pandas, NumPy, and IPython, Wes McKinney (2nd Edition), 2017, O'Reilly Press

- Data Science from Scratch: First Principles with Python, Joel Grus (2nd Edition), 2019, O'Reilly Press

- Introduction to Machine Learning with Python: A Guide for Data Scientists, Andreas C. Muller, Sarah Guido (1st Edition), 2017, O'Reilly Press

Data Visualization:

- Data Visualization: A Practical Introduction, Kieran Healy (1st Edition), 2019, Princeton University Press

- Visualization Analysis and Design, Tamara Munzner (1st Edition), 2014, CRC Press

- Better Data Visualizations: A Guide for Scholars, Researchers, and Wonks, Jonathan Schwabish (1st Edition), 2021, Columbia University Press

- The Visual Display of Quantitative Information, Edward R. Tufte (2nd Edition), 2001, Graphics Press

- Fundamentals of Data Visualization - A Primer on Making Informative and Compelling Figures, Claus O. Wilke (1st Edition), 2019, O'Reilly Press (Free Book)

- Making Data Visual - A Practical Guide to Using Visualization for Insight, Danyel Fisher, Miriah Meyer (1st Edition), 2018, O'Reilly Press

- Storytelling with Data: A Data Visualization Guide for Business Professionals, Cole Nussbaumer Knaflic (1st Edition), 2015, Wiley

Other Materials / Resources

- Google Machine Learning Glossary

- An overview of Python Data Visualization libraries

- Philip W. L. Fong, 2009. Reading a Computer Science research paper. ACM SIGCSE Bulletin, 41(2), pp.138-140

- You and Your Research by Richard Hamming (Bell Labs / NPS). Bell Communications Research Colloquium Seminar, 7 March 1986

- An Online LaTeX Editor: Overleaf

- LaTeX Tutorial (Overleaf): Learn LaTeX in 30 minutes

- The Not So Short Introduction to LaTeX by Tobias Oetiker, Hubert Partl, Irene Hyna, Elisabeth Schlegl, 2021

- How To Speak / Present (Video) by Patrick Winston (MIT)